- California's Assembly Bill 587 (AB 587), a controversial social media transparency law, has been significantly weakened by the courts. The ruling, following a legal challenge by Elon Musk's X (formerly Twitter), preserves only minimal reporting requirements.

- Enacted in 2022, AB 587 aimed to ensure "social media transparency" by mandating detailed biannual reports from large social media companies on their content moderation practices, including definitions of terms like "hate speech" and data on flagged or removed posts.

- The legal battle coincides with a broader trend toward community-driven content moderation, where users, rather than algorithms or corporate policies, flag and contextualize potentially harmful content.

- The court's decision highlights the tension between protecting free speech and addressing the real-world impact of harmful online content.

- The debate continues over how to balance free speech with online safety, emphasizing the need for thoughtful solutions in an era where misinformation can spread rapidly.

The law that sparked a First Amendment firestorm

AB 587, passed in 2022, was touted by California Governor Gavin Newsom and Attorney General Rob Bonta as a necessary step to ensure “social media transparency.” The law required large social media companies to submit detailed reports twice a year about their content moderation practices, including definitions of terms like “hate speech,” “disinformation,” and “extremism,” as well as data on how often posts were flagged or removed.

However, critics argued that the law was a thinly veiled attempt to impose government censorship on private platforms. X Corp. (formerly Twitter) led the charge, filing a lawsuit claiming that AB 587 violated the First Amendment by compelling companies to disclose sensitive information about their moderation policies. The U.S. Court of Appeals for the Ninth Circuit agreed, issuing an injunction and ultimately leading to a settlement that stripped the law of its most contentious provisions.

“Essentially, what was at issue was whether California could compel social media companies to detail how they defined and treated offensive and/or controversial content in several categories,” explained Tamara Zellers Buck, a mass media professor at Southeast Missouri State University. “The courts viewed this as an overreaching, content-based restriction on speech.”

A shift toward community-driven moderation

The legal battle over AB 587 comes amid a broader shift in how social media platforms handle content moderation. Since Elon Musk’s acquisition of Twitter in 2022, the platform has embraced a community-driven model, where users, not algorithms or corporate gatekeepers, flag and contextualize potentially harmful content. This approach, exemplified by X’s “Community Notes” feature, has been adopted by other platforms, including Meta’s Facebook and Instagram.

Rob Lalka, a professor at Tulane University and author of The Venture Alchemists, noted that this shift reduces the financial and operational burden on companies but raises critical questions about accountability. “By shifting responsibility for monitoring and enforcing policies on harmful content from companies to users, the risks of misinformation, harassment and extremism become more diffused, and potentially more difficult to contain,” Lalka warned.

The debate over community-driven moderation echoes a century-old argument made by former Supreme Court Justice Louis Brandeis, who famously wrote, “If there be time to expose through discussion the falsehood and fallacies, to avert the evil by the processes of education, the remedy to be applied is more speech, not enforced silence.” Proponents of this approach argue that decentralized fact-checking fosters a more open and balanced exchange of ideas. Critics, however, fear it could lead to unchecked misinformation and extremism.

The real-world consequences of online speech

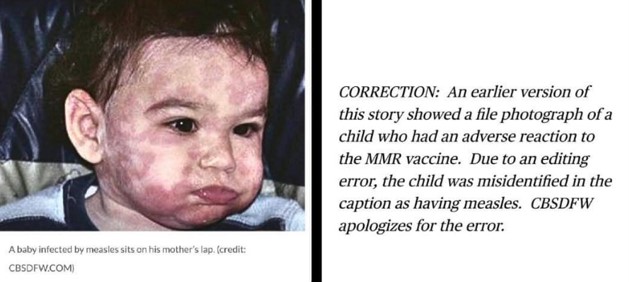

While the court’s decision is a win for free speech advocates, it also highlights the growing tension between protecting constitutional rights and addressing the real-world consequences of harmful online content. As Lalka pointed out, “What spreads on computers and phones does not remain confined to the digital world.” From the genocide in Myanmar to the January 6th insurrection at the U.S. Capitol, the amplification of extremist ideologies and disinformation has had devastating effects.

The stakes are particularly high for children, who are often the most vulnerable to online harassment, exploitation and exposure to harmful content. Without clear guidelines or oversight, critics argue, social media platforms could become breeding grounds for abuse.

“Disinformation increases with exposure, and certainly, that’s a possible result if content that is offensive and wrong isn’t removed,” said Buck. “However, there’s a difference between offensiveness and wrongness, and we can’t try to regulate on the assumption they are the same.”

What’s next for social media regulation?

The gutting of AB 587 leaves California with only minimal reporting requirements, such as submitting terms of service twice a year and detailing any changes. While this preserves the autonomy of social media companies, it also leaves a regulatory vacuum that lawmakers are eager to fill. Assemblymember Jesse Gabriel, the Democrat who authored AB 587, expressed disappointment with the outcome but vowed to continue pushing for legislation to protect communities. “I look forward to working closely with my colleagues as we consider additional legislation to protect our communities,” he said in a statement.

As the debate over social media regulation continues, one thing is clear: the challenge lies in striking a balance between protecting free speech and ensuring online safety. In an era where misinformation spreads faster than ever, thanks in part to advances in AI and the viral nature of social media, the need for thoughtful, nuanced solutions has never been greater.

Sources include: InfoWars.com, Forbes.com, Yahoo.com
