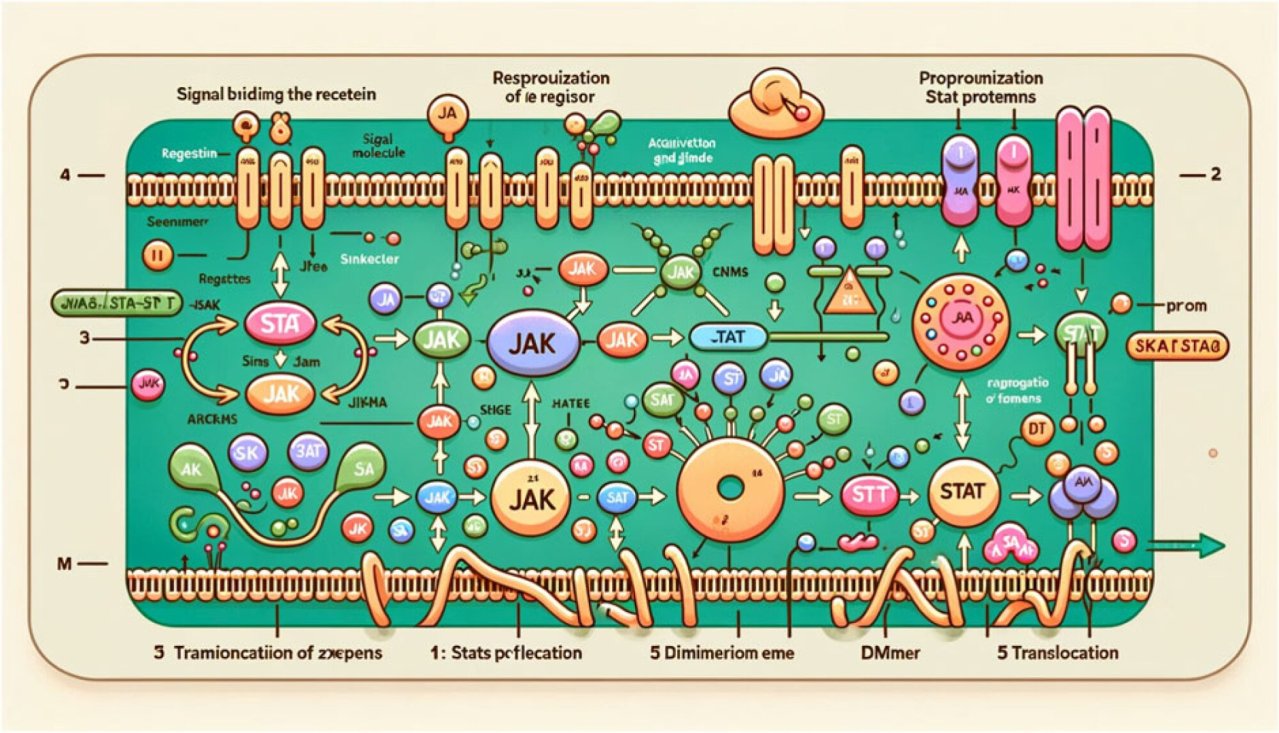

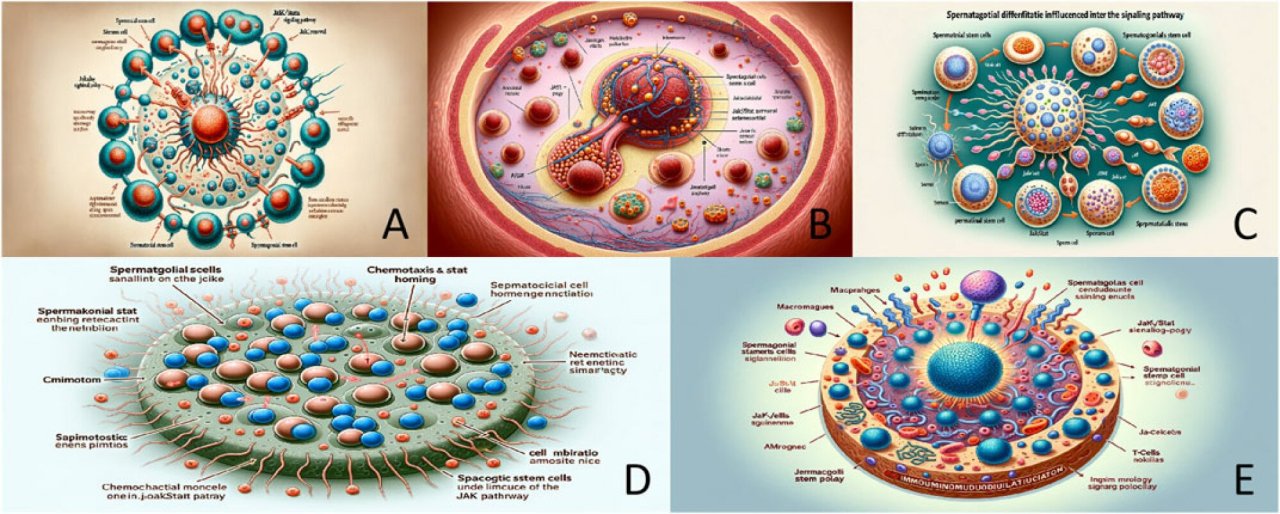

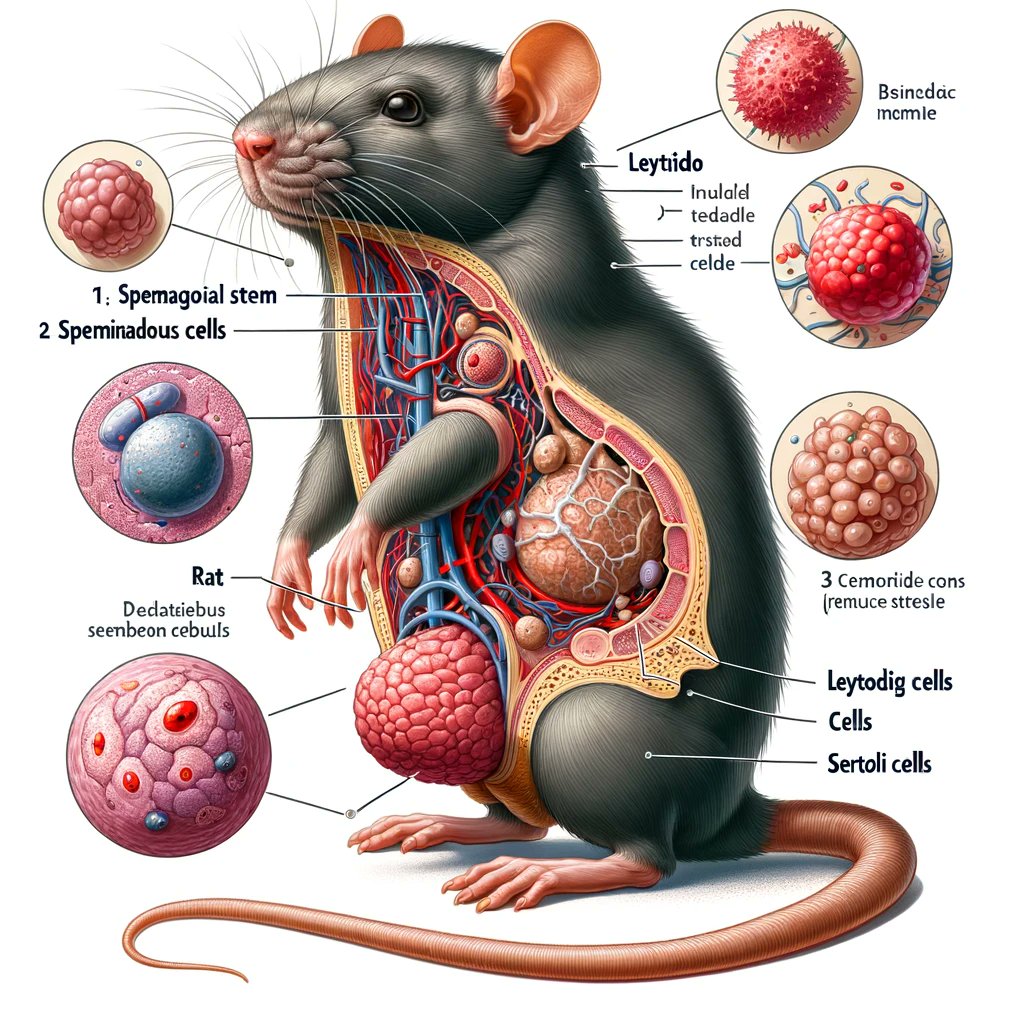

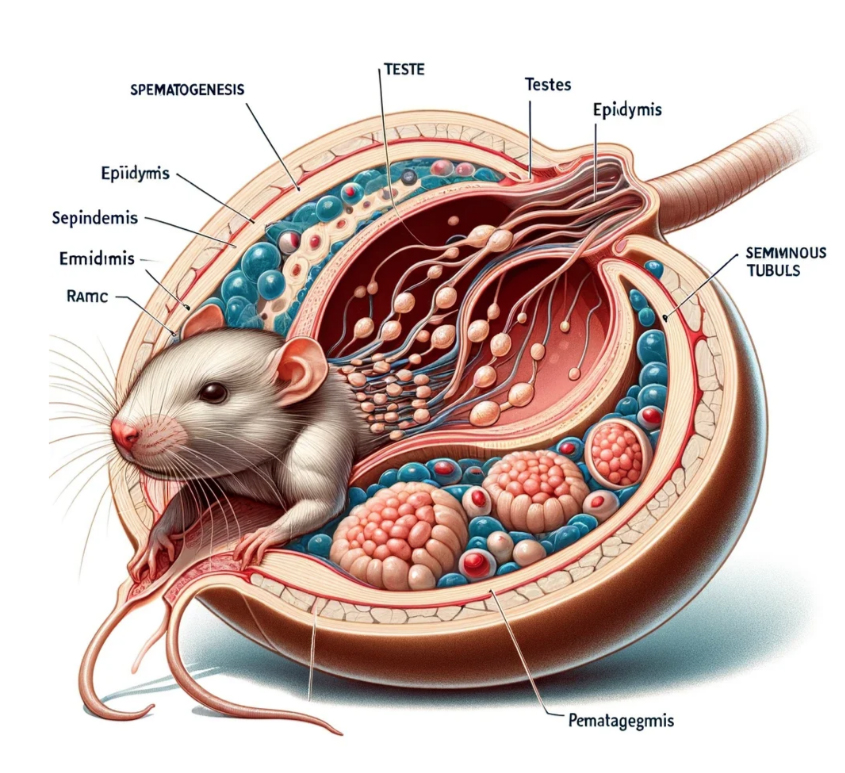

Subsequent images in the paper also displayed terms and biological systems that simply are not real.

Erm, how did Figure 1 get past a peer reviewer?! https://t.co/pAkRmfuVed H/T @aero_anna pic.twitter.com/iXpZ1FvM1G

— Dr CJ Houldcroft (@DrCJ_Houldcroft) February 15, 2024

Remarkably, the paper found its way into the journal, where it was read by scientists who immediately recognised the images and descriptions were not grounded in “any known biology”.

The journal retracted the paper, issued an apology and announced that it is working to “correct the record”.

Adrian Liston, professor of pathology at Cambridge University and editor of the journal Immunology & Cell Biology, warned of the dangers of AI being used to create scientific diagrams, noting: “Generative AI is very good at making things that sound like they come from a human being. It doesn’t check whether those things are correct.”

“It is like an actor playing a doctor on a TV show – they look like a doctor, they sound like a doctor, they even use words that a doctor would use. But you wouldn’t want to get medical advice from the actor,” he further noted, warning that “The problem for real journals is getting harder, because generative AI makes it easier for cheats.”

“It used to be really obvious to tell cheat papers at a glance. It is getting harder, and a lot of people in scientific publishing are getting genuinely concerned that we will reach a tipping point where we won’t be able to manually tell whether an article is genuine or a fraud,” Liston cautioned.

People have since been creating their own AI rat images, in an attempt to work out what the Chinese ‘researchers’ typed into Midjourney to make the images that got published.

Read more at: Modernity.news
